Class Incremental Semantic Segmentation (CISS), within Incremental Learning for semantic segmentation, targets segmenting new categories while reducing the catastrophic forgetting on the old categories.

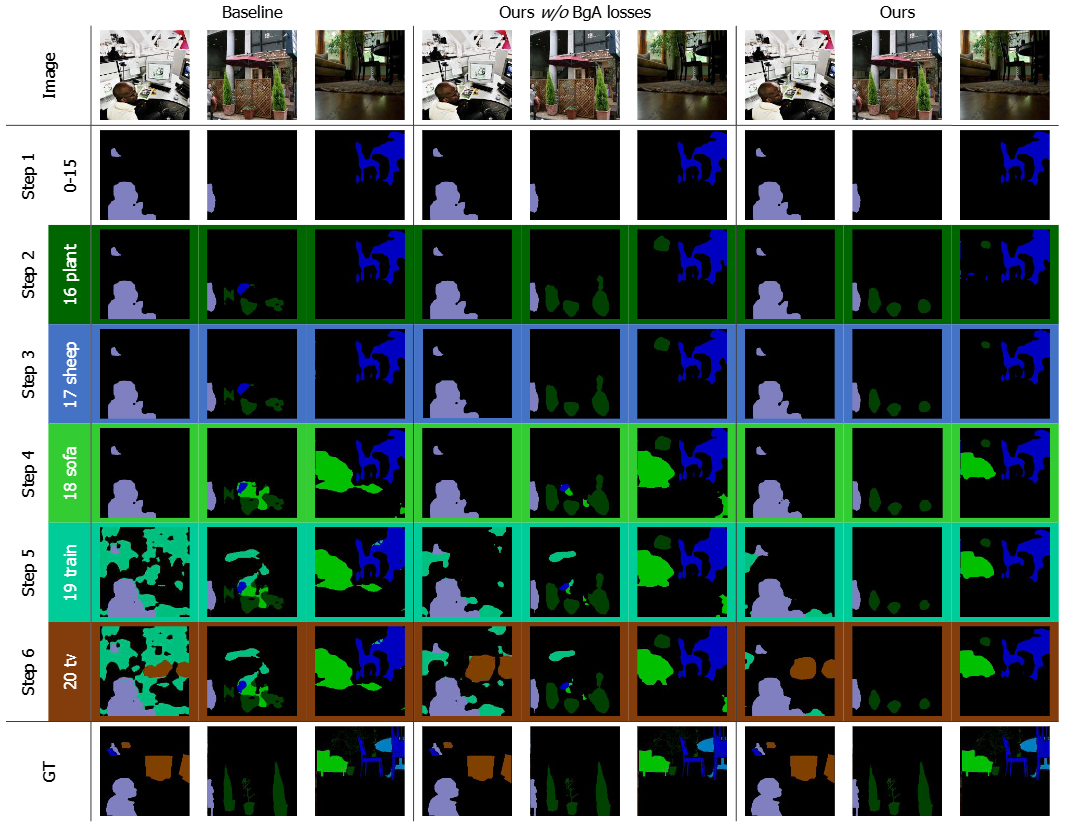

Besides, background shifting, where the background category changes constantly in each step, is a special challenge for CISS.

Current methods with a shared background classifier struggle to keep up with these changes, leading to decreased stability in background predictions and reduced accuracy of segmentation.

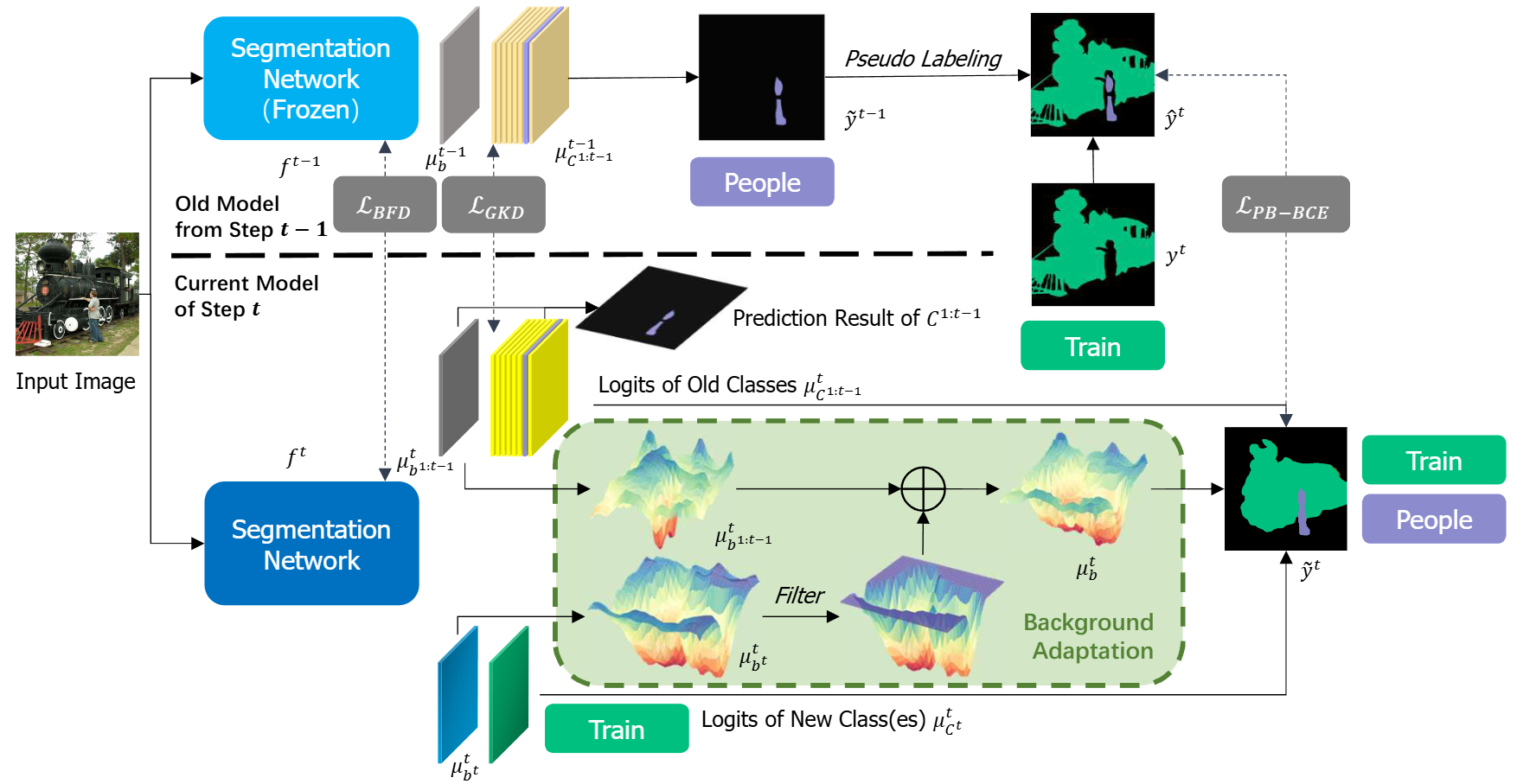

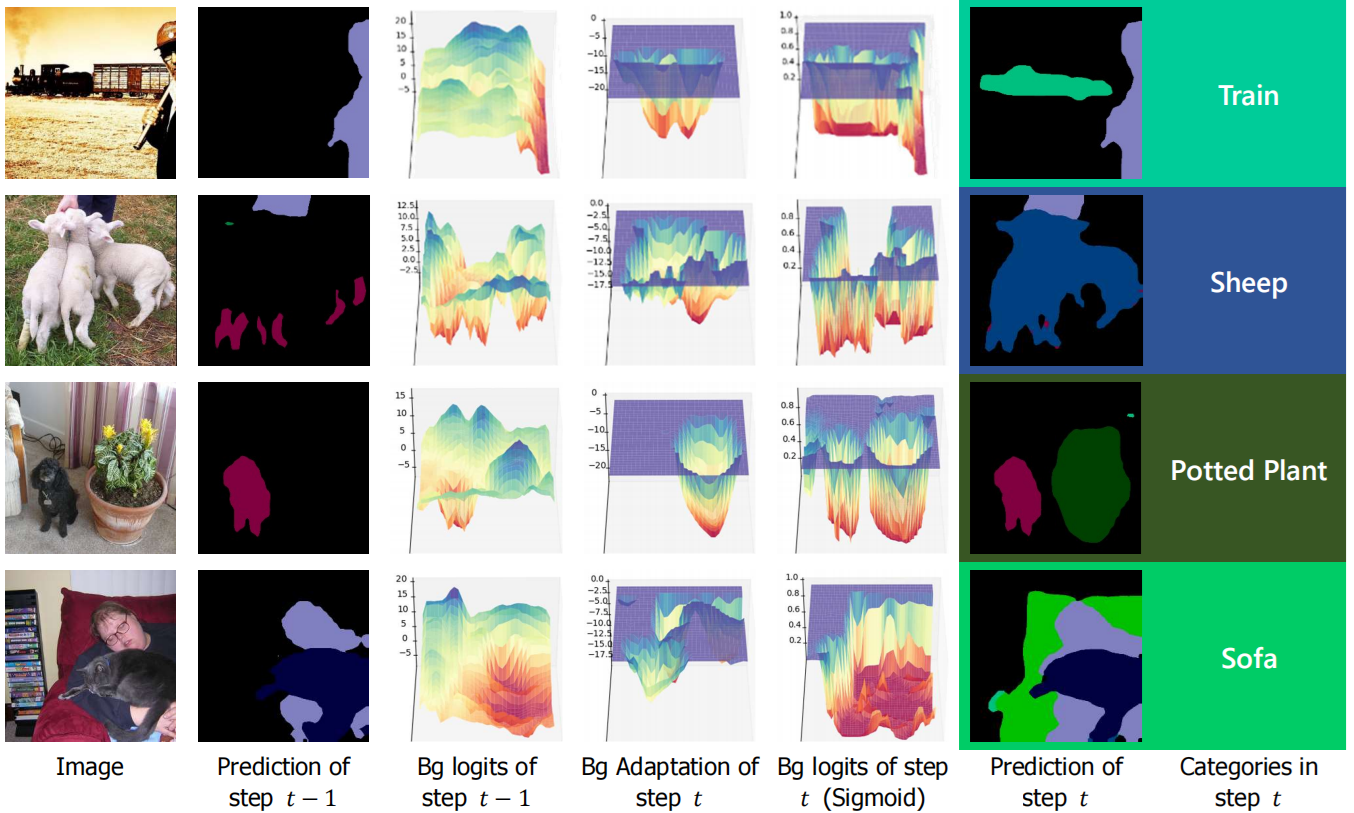

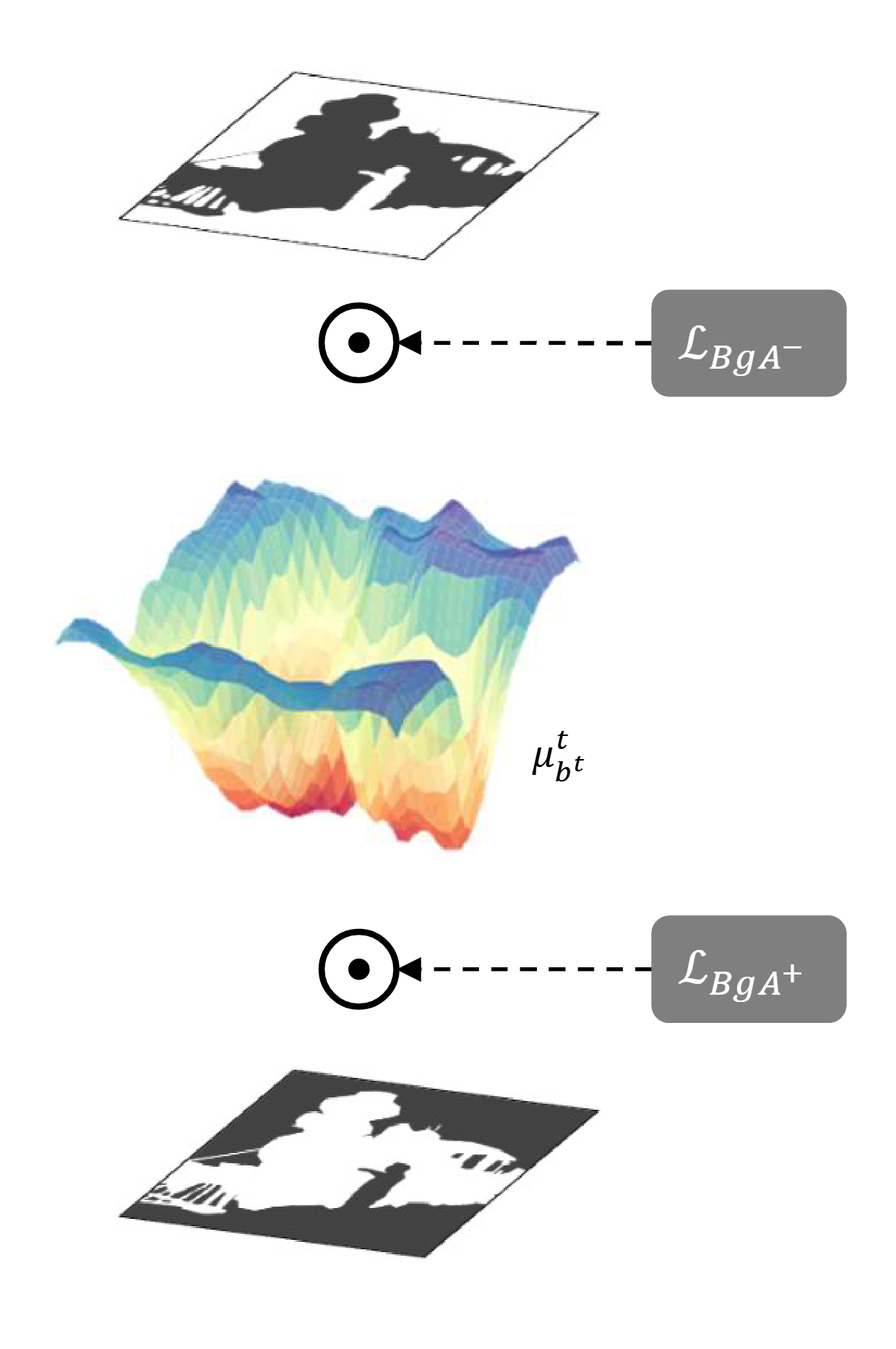

For this special challenge, we designed a novel background adaptation mechanism, which explicitly models the background residual rather than the background itself in each step, and aggregates these residuals to represent the evolving background.

Therefore, the background adaptation mechanism ensures the stability of previous background classifiers, while enabling the model to concentrate on the easy-learned residuals from the additional channel, which enhances background discernment for better prediction of novel categories.

To precisely optimize the background adaptation mechanism, we propose Pseudo Background Binary Cross-Entropy loss and Background Adaptation losses, which amplify the adaptation effect.

Group Knowledge Distillation and Background Feature Distillation strategies are designed to prevent forgetting old categories.

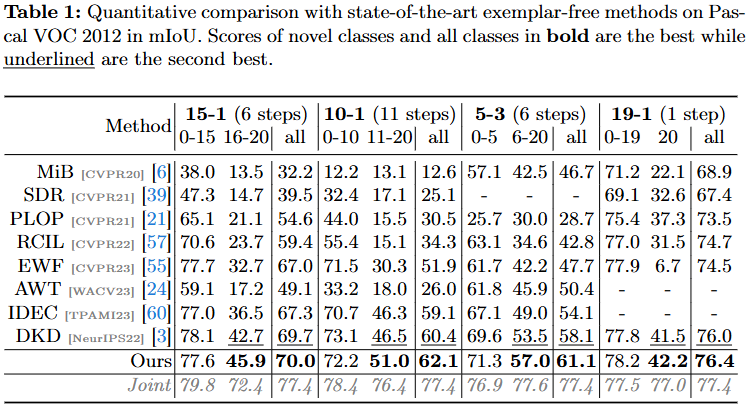

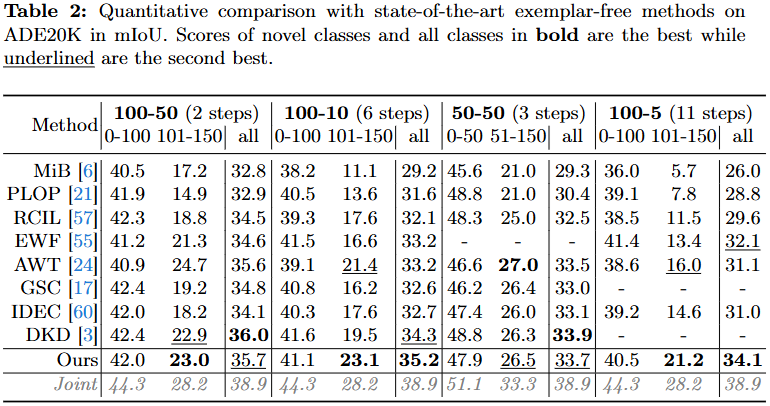

Our approach, evaluated across various incremental scenarios on Pascal VOC 2012 and ADE20K datasets, outperforms prior exemplar-free state-of-the-art methods with mIoU of 3.0% in VOC 10-1 and 2.0% in ADE 100-5, notably enhancing the accuracy of new classes while mitigating catastrophic forgetting.